Something as simple as changing the font of a message or cropping an image can be all it takes to bypass Facebook's defenses against hoaxes and lies.

A new analysis by the international advocacy group Avaaz shines light on why, despite the tech giant's efforts to stamp out misinformation about the coronavirus pandemic and the U.S. election, it's so hard to stop bad actors from spreading these falsehoods.

"We found them getting around Facebook's policies by just tweaking the misinformation a little bit, and it was still going viral," said Fadi Quran, campaign director at Avaaz.

Facebook relies on both human reviewers and artificial intelligence to catch posts that break its rules. But Avaaz's analysis suggests that net has holes through which false claims proliferate.

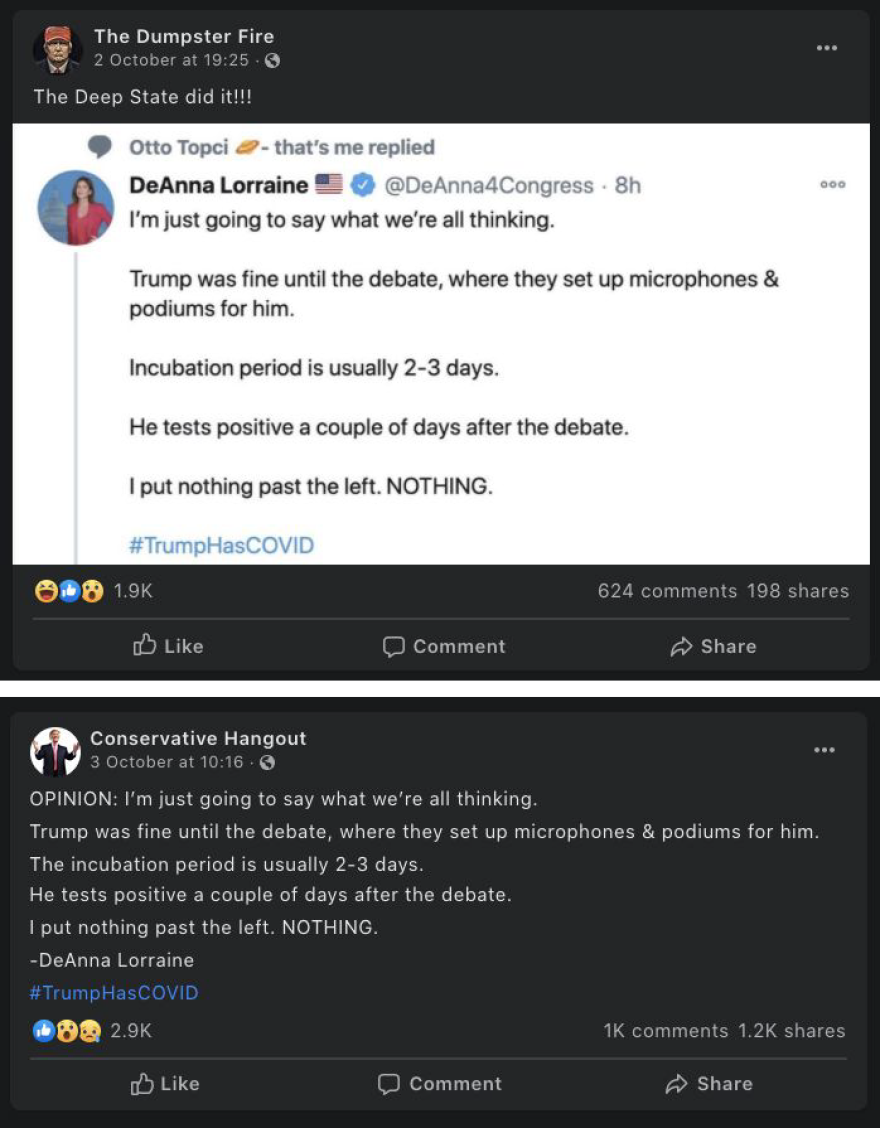

In one example the group found, users shared a screenshot of a tweet claiming, falsely, that President Trump was deliberately infected with COVID-19 by "the left." Facebook overlaid a warning label on some versions of the post, saying independent fact-checkers determined it was "false information," and linking to a USA Today article debunking the claim.

But Facebook did not apply the same label to versions of the message that were nearly identical, except with different backgrounds or croppings.

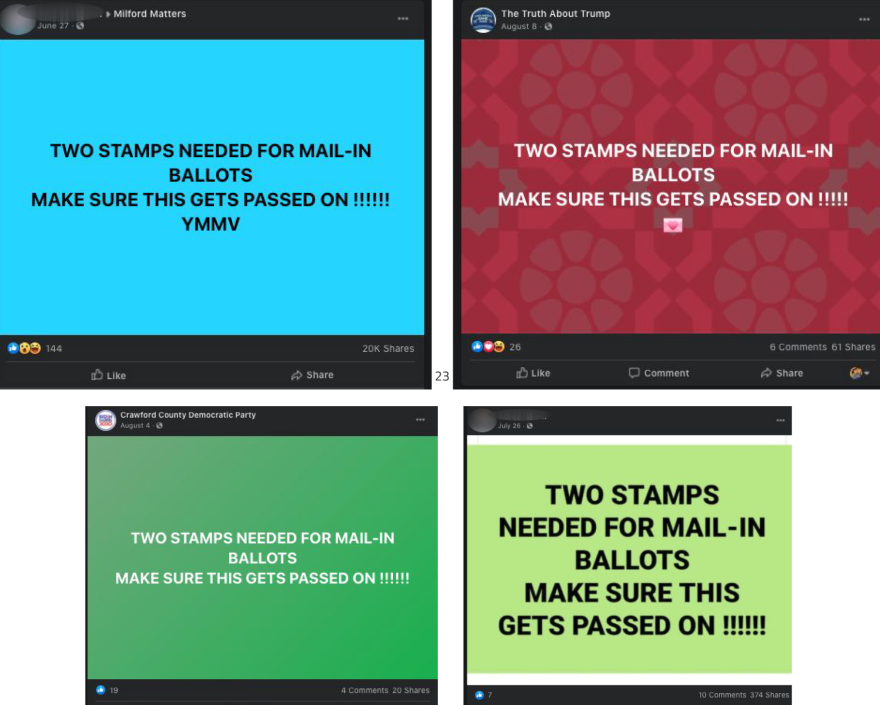

A meme claiming mail-in ballots need two stamps was labeled "partly false." But Facebook did not label other versions of the same message — versions in which the user had changed the font or background color, or written out the text of the meme instead of posting it as an image. One of those unlabeled posts was shared 20,000 times.

Avaaz found similar patterns with posts covering a range of falsehoods. Some were meant to disparage former Vice President Joe Biden, others sought to link President Trump with white supremacists, and still others promoted a false cure for the coronavirus, for which no cure exists.

Facebook told NPR that Avaaz's findings "don't accurately reflect the actions we've taken." It said it has carried out enforcement actions against the majority of the pages and groups Avaaz identified, such as reducing the distribution of their posts, not recommending that users join them, and barring them from monetizing or advertising. Facebook imposes such penalties on pages that repeatedly share false information.

Facebook has policies against posting misinformation and has cracked down this year on hoaxes and false claims about COVID-19 and voting, amid growing concerns over the real-world harm that can stem from these messages' spread on social media.

The company relies on independent fact-checkers, including PolitiFact, Reuters and the AP, to rate the accuracy of claims. If fact-checkers find misinformation, Facebook puts a warning label on the post, alerts users before they share it and notifies people who have already shared it.

It also reduces the post's distribution by, for example, not showing it near the top of a user's News Feed. The company says it uses artificial intelligence to detect copies of misinformation it has identified and apply labels to them, too. Between March and September of this year, it said, it applied warning labels to more than 150 million pieces of content that were debunked by fact-checkers.

"We remain the only company to partner with more than 70 fact-checking organizations, using AI to scale their fact-checks to millions of duplicate posts, and we are working to improve our ability to action on similar posts," Facebook said.

Facebook failed to label 42% of posts with debunked claims

But Avaaz found the cat-and-mouse game alive and well. The group examined 1,776 posts — mainly memes, photos and status updates — that Facebook's independent fact-checking partners had found false or misleading, between October 2019 and early August 2020.

Of those posts, 738, or nearly 42%, were not labeled, even though they contained claims that had been debunked. Using data from CrowdTangle, a research tool that Facebook owns, Avaaz estimated the unlabeled posts it found had been viewed 142 million times and drawn 5.6 million interactions, such as likes, comments or shares.
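For readers who want to check the arithmetic behind the headline figure, the short sketch below recomputes the share of unlabeled posts from the two counts Avaaz reported. The variable names are illustrative only and do not come from Avaaz or Facebook.

```python
# Counts reported in the Avaaz analysis
total_posts = 1776      # fact-checked posts Avaaz examined
unlabeled_posts = 738   # posts that carried no warning label

# Posts that did carry a label, by subtraction
labeled_posts = total_posts - unlabeled_posts

# Share of examined posts left unlabeled, as a percentage
unlabeled_share = unlabeled_posts / total_posts * 100

print(f"Labeled posts: {labeled_posts}")
print(f"Unlabeled share: {unlabeled_share:.1f}%")
```

The result comes out just under 42%, which matches the "nearly 42%" figure above.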

There appear to be orchestrated efforts to spread many of these falsehoods. Avaaz identified 119 pages that had posted misinformation at least three times in the past year. Of those, 46 pages had shared unlabeled versions of posts the fact-checkers had deemed false.

Quran said the company may not be catching all these repeat offenders if it is not consistently labeling fact-checked content.

"Once they see a piece of misinformation that is hitting a nerve in the U.S. and going viral, even when it's flagged by Facebook, these pages will take it, flip it, edit it a little bit and then circumvent Facebook's system," Quran said. "[They] continue to build up their network and reach more and more people and amplify these lies, without being stopped by the platform."

Editor's note: Facebook is among NPR's financial supporters.

Copyright 2021 NPR. To see more, visit https://www.npr.org.